Innovation Profs - 6/27/2025

Your guide to getting the most out of generative AI tools

Welcome to Gen AI Summer School

We’re spending the summer teaching you the essentials you need to succeed in an AI-forward world.

Here’s the plan:

May 30: Intro to large language models

June 6: Multimedia tools

June 13: Guide to prompting

June 20: Building a prompt library

Today: Building custom GPTs

July 11: Intro to reasoning models

July 18: Intro to deep research

July 26: AI ethics

Aug. 1: Implementing Gen AI in your job

Aug. 8: Implementing Gen AI at your company

Aug. 15: The road to Artificial General Intelligence

Aug. 22: Where Gen AI is headed

From a prompt library to custom GPTs

In our previous edition of Gen AI Summer School, we discussed the advantages of building a prompt library over crafting one-off prompts on the fly. But there’s still room for improvement: What if a cluster of prompts from our library, or even a single prompt, requires us to provide a large amount of context before we get to the request? In that case, building something like a custom GPT might be in order. A warning up front: Only paid ChatGPT users can build custom GPTs (although paid and unpaid users alike can use them).

What is a custom GPT? A custom GPT is simply a custom version of ChatGPT tailored to a specific purpose. Wait…a custom version of ChatGPT? Doesn’t it cost hundreds of millions of dollars to train the various GPT models? How could we possibly build our own custom version of ChatGPT?

It all depends on what you mean by “build” and “version.” When you build a custom GPT, you’re not really building anything. Instead, you’re providing additional information that gets packaged with every prompt you pass to your GPT. It’s this larger prompt, your original prompt plus the additional information, that gets passed along to the LLM.

Think of it this way: If we follow the BRIEF template for an effective prompt (Background, Role, Instructions, Examples, and Format), we can build a custom GPT that captures the Background, Role, Examples, and Format under the hood, so to speak. Each of our prompts can then be reduced to the Instructions, i.e., the specific request we are making. Similarly, if we have a cluster of prompts from our prompt library that share a common background, we can configure a custom GPT to take all of that background information for granted.
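To make the “packaging” idea concrete, here is a minimal Python sketch. It assumes, for illustration only, that a custom GPT’s stored configuration can be modeled as plain text for the Background, Role, Examples, and Format, which is then combined with whatever Instructions the user types. The names `CustomGPTConfig` and `build_full_prompt` are hypothetical, and this is not a description of how OpenAI actually implements GPTs.

```python
from dataclasses import dataclass


@dataclass
class CustomGPTConfig:
    """Hypothetical stored configuration: the B, R, E, and F of BRIEF."""
    background: str
    role: str
    examples: str
    output_format: str


def build_full_prompt(config: CustomGPTConfig, instructions: str) -> str:
    """Combine the stored context with the user's request (the I in BRIEF).

    Sections follow the BRIEF order and are separated by blank lines.
    This is an illustrative assumption, not OpenAI's actual mechanism.
    """
    sections = [
        ("Background", config.background),
        ("Role", config.role),
        ("Instructions", instructions),
        ("Examples", config.examples),
        ("Format", config.output_format),
    ]
    return "\n\n".join(f"{name}: {text}" for name, text in sections)


# A hypothetical configuration for a syllabus assistant.
syllabus_helper = CustomGPTConfig(
    background="Answer questions using the attached course syllabus.",
    role="You are a course assistant for a college class.",
    examples="Q: When is the midterm? A: Check the schedule in the syllabus.",
    output_format="Answer in two or three sentences.",
)

# The user types only the request; everything else is supplied automatically.
print(build_full_prompt(syllabus_helper, "When are office hours?"))
```

The design point is that the stored parts never change between conversations, so the user only ever writes the request. In the actual product, ChatGPT handles this combination for you.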

But there’s one more feature that makes custom GPTs so useful: We can upload documents to serve as part of the background information for our custom GPT. This proves particularly powerful, because the GPT can then answer questions based on those documents without our having to paste them into every conversation.

An example of a custom GPT

Let’s take a look at an example of a custom GPT, which will help explain why one might think of a custom GPT as a “version” of ChatGPT. Below is a link to the AI 010 Syllabus Expert that I (Porter) created for my Drake course AI 010: Interdisciplinary Perspectives on AI.

If you click on the link, you’ll be taken to a page that looks a lot like the main ChatGPT landing page (hence, a “version” of ChatGPT):

I configured this GPT by uploading a copy of my course syllabus and instructing it to answer only questions pertaining to the syllabus. Anything outside the bounds of the class is off-limits:

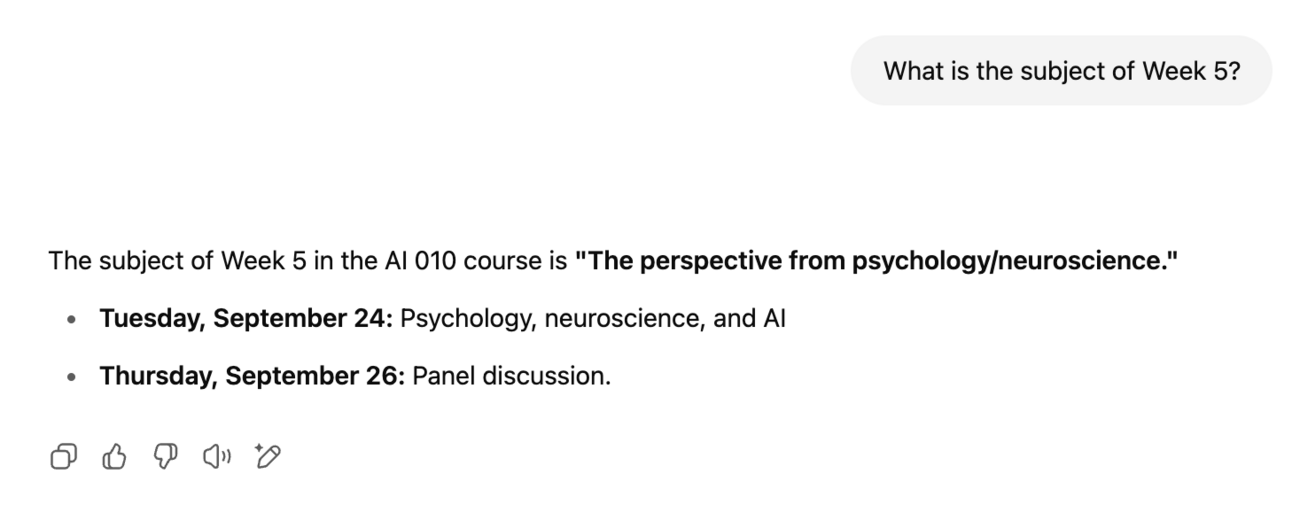

If we ask a question about the class whose answer can be found in the syllabus, we get an answer (presumably the right one, but we should test our GPT in advance just in case):

I share a link to this GPT with my students, and now they can easily access course information without having to open the syllabus and pore over it to answer their question (which they weren’t doing to begin with). And because unpaid ChatGPT users can still use custom GPTs, all of my students can access the Syllabus Expert.

How to build a custom GPT

It’s beyond the scope of this discussion to explain every detail of how to build a custom GPT, but I’ll say this much: It’s really quite easy. You just have a conversation with the GPT Builder, a GPT configured to build GPTs, and you can even try out your GPT while you’re building it. For the details, take a look at OpenAI’s guide Creating a GPT.

It’s worth mentioning that custom GPTs are specific to ChatGPT, but there are analogues of GPTs in Microsoft Copilot (called Agents) and Google Gemini (called Gems…unfortunately). Paid Copilot users can configure their own Agents in Copilot Studio (copilotstudio.microsoft.com), while Gemini users (even unpaid ones!) can build their own Gems at https://gemini.google.com/gems/create. You can learn about building Gems here.

Conclusion

Custom GPTs represent a natural evolution from prompt libraries: They simplify repeated tasks, streamline the user experience, and let us embed deep context without repeating ourselves. Whether you’re a teacher building a course assistant, a marketer managing campaigns, or anyone working with structured prompts, custom GPTs offer a practical way to scale your prompt design efforts. As these tools become more accessible and more deeply integrated into platforms like ChatGPT, Microsoft Copilot, and Google Gemini, the line between a good prompt and a smart assistant continues to blur. Now that you know what’s possible, the next step is simply to try building one.