Innovation Profs - 7/11/2025

Your guide to getting the most out of generative AI tools

Welcome to Gen AI Summer School

We’re spending the summer teaching you the essentials you need to succeed in an AI-forward world.

Here’s the plan:

May 30: Intro to large language models

June 6: Multimedia tools

June 13: Guide to prompting

June 20: Building a prompt library

June 27: Building Custom GPTs

Today: Intro to reasoning models

July 18: Intro to deep research

July 25: AI ethics

Aug. 1: Implementing Gen AI in your job

Aug. 8: Implementing Gen AI at your company

Aug. 15: The road to Artificial General Intelligence

Aug. 22: Where Gen AI is headed

Introduction to Reasoning Models

Remember the early days of LLMs, when we were told we could get better results by telling the models to “stop and think”? That’s the idea behind reasoning models.

Traditional large language models aggressively predict the next word to say. They can appear smart, but they often skip the disciplined, multi-step thinking humans use for difficult problems. “Reasoning models” instead plan before they speak: they internally generate a chain of thought, check it, and only then deliver a concise answer.

OpenAI’s o-series, Anthropic’s Claude 4 and Google’s Gemini Pro are examples of current reasoning models.

OpenAI says its reasoning models “execute tasks with high accuracy and precision, making them ideal for domains that would otherwise require a human expert—like math, science, engineering, financial services, and legal services.”

What reasoning models do well

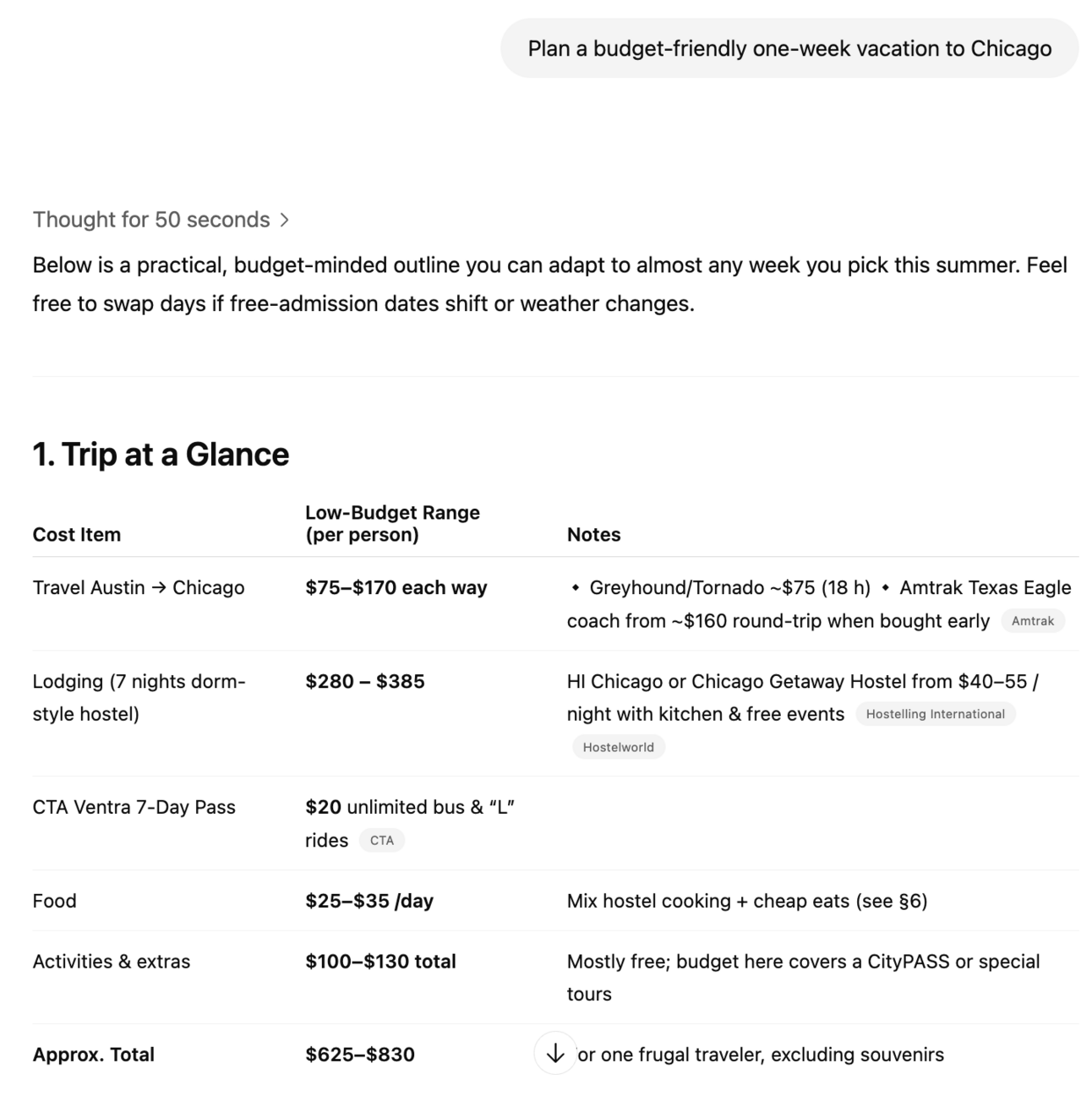

Reasoning models excel at turning complex topics into plain-language explanations. They can patiently tackle multi-step tasks such as planning a week-long vacation within a set budget.

Notice the line in the image above that says “Thought for 50 seconds.” That is the time the reasoning model spent working through the prompt and checking information before it answered.

They breeze through hefty documents by reading and summarizing them in moments. They can invoke built-in helpers like calculators, coding sandboxes, or web lookups when you ask.

And they even interpret images, so you can drop in a busy chart or photo and say, “Explain what I’m seeing.”

Tips for better results

If you’d like a detailed walk-through, prompt the model with a request such as “Walk me through your thinking before you decide,” which nudges it to lay out its reasoning step by step.

When brevity matters, capping the reply with something like “Answer in under 150 words” keeps the response short and can help trim usage costs (the model’s behind-the-scenes reasoning still counts toward usage).

To reduce slip-ups, ask the model to “Cite any source you rely on,” which prompts it to ground its claims in information you can check rather than improvise.

For fresh ideas, a prompt like “Give me three wildly different options first” encourages broader brainstorming before diving into details.

And when you need advice tailored to your world, anchoring the request with a detail like “Use examples that suit a small nonprofit” helps the model ground its answer in your specific situation.
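If you keep a prompt library, the tips above can be stored once and combined as needed. Here is a minimal Python sketch of that idea. The function `build_prompt` and its option names are hypothetical, chosen for illustration. The code only assembles text from the tip phrases in this post and does not call any AI model or service.

```python
from typing import Optional


def build_prompt(
    task: str,
    context: Optional[str] = None,
    options_first: Optional[int] = None,
    show_reasoning: bool = False,
    cite_sources: bool = False,
    word_limit: Optional[int] = None,
) -> str:
    """Return the task followed by any chosen tip phrases, one per line.

    Hypothetical prompt-library helper: it only builds text and
    does not send anything to a model.
    """
    lines = [task.strip()]
    if context:
        # Anchor the answer in your own situation.
        lines.append(f"Use examples that suit {context}.")
    if options_first:
        # Ask for divergent ideas before diving into details.
        lines.append(f"Give me {options_first} wildly different options first.")
    if show_reasoning:
        # Ask for a step-by-step walk-through of the reasoning.
        lines.append("Walk me through your thinking before you decide.")
    if cite_sources:
        # Ask for sources you can check afterward.
        lines.append("Cite any source you rely on.")
    if word_limit:
        # Put the length cap last so it is the final instruction.
        lines.append(f"Answer in under {word_limit} words.")
    return "\n".join(lines)


prompt = build_prompt(
    "Suggest ways to promote our fall fundraiser.",
    context="a small nonprofit",
    cite_sources=True,
    word_limit=150,
)
print(prompt)
# Suggest ways to promote our fall fundraiser.
# Use examples that suit a small nonprofit.
# Cite any source you rely on.
# Answer in under 150 words.
```

You could paste the printed text into any chat-based reasoning model. Keeping each tip as a named option, rather than retyping it, makes it easy to mix and match tips from one task to the next.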